Surveys are widely used across many fields, which makes them seem straightforward to create. However, designing an effective survey requires more than just asking questions. A poorly designed survey can lead to conflicting data, misleading conclusions, and ultimately wasted research effort. A well-crafted survey, by contrast, can uncover insights at scale, particularly about the "what" behind user behavior.

Survey design is more challenging than it appears, and there’s no one-size-fits-all formula: different research goals require different approaches. However, by following best practices and avoiding common pitfalls, you can craft surveys that yield reliable, actionable insights.

We’ll break down how to design an engaging survey as a whole and how to write clear questions that yield meaningful, actionable insights. Finally, we’ll look at how AI can help streamline your survey development.

Survey Tips: TL;DR

While designing a survey may seem simple, crafting an effective one is an art that requires creating a smooth, engaging flow and using clear, intentional language to uncover exactly what you want to learn from participants. Below are some key takeaways:

- Set clear research goals from the start: These act as your north star, especially when stakeholders begin suggesting extra questions that can dilute the study’s focus.

- Boost engagement by easing participants in: Start with simple, easy-to-answer questions to build momentum before transitioning into more complex or opinion-based ones.

- Make every question count: Each question should serve a clear purpose. This keeps your survey focused and compact.

- Be mindful of open-ended overload: If you find yourself asking too many open-text questions, consider whether a different method—like user interviews—would better suit your research needs.

- Ask about what participants did, not what they think they’ll do: People are better at recalling past behavior than predicting future actions, making behavioral questions more reliable.

Designing an Effective Survey

To make the most of your survey research, you need a clear strategy and a thoughtful overall design, from the flow of the questions to how they align with your research goals. Below are a few key tips:

Define Your North Star

Creating an effective survey requires careful planning from the start. It’s essential to articulate clear research goals—what you want to measure, the hypotheses you aim to test, key characteristics of your target audience, the insights you hope to uncover, and the type of data needed to support those insights. A well-defined goal not only ensures your survey remains focused and concise but also improves the quality of the data collected.

As you refine your survey, stakeholder input can sometimes lead to ad-hoc additions—extra questions inserted to explore topics that are out of scope. While stakeholder contributions are valuable, they can also dilute the survey’s focus. This is why having a clear research goal serves as your north star—helping you push back on unnecessary additions and keep the survey aligned with its original purpose.

Survey Flow Matters

It’s tempting to jump straight to the most important questions. After all, shouldn’t we just cut to the chase and ask for the insights we need so that we don’t waste people’s time? Unless you’re running a simple opinion poll for a general audience, though, a survey’s format and structure affect participant engagement and completion rates.

A well-designed survey starts with easy, low-effort questions—think general preferences, product usage, or simple multiple-choice formats. This helps ease participants in and builds momentum before they reach more complex or opinion-based inquiries.

A gradual, structured progression keeps users engaged and minimizes drop-off rates. If you’re including open-ended text responses, save them for later, once survey respondents are already invested. Placing difficult or time-consuming questions too early can overwhelm respondents, leading to fatigue and incomplete surveys.

In short, good survey flow isn’t just about asking the right questions; it’s about asking them at the right time.

Make Every Question Intentional

Every question in your survey should serve a clear purpose and contribute directly to your research objectives. For each question, make sure you can answer the following:

- What insight am I trying to gain from this question?

- How does this contribute to my overall research goals?

If you can’t answer these confidently, the question might not belong in your survey. When surveys become too long, response quality drops, so take a step back and evaluate whether each question is necessary.

That said, being intentional doesn’t mean cramming multiple ideas into a single question. Avoid overloaded or convoluted phrasing—keep each question clear, concise, and directly tied to your objective. A well-structured survey isn’t about asking more questions. It’s about asking the right ones in the right way.

Don’t Ask Questions You Can Answer Elsewhere

Surveys should fill in knowledge gaps—not repeat what you already know. If the data exists elsewhere, such as in analytics dashboards, CRM tools, or previous research, there’s no need to ask users for it again.

Instead, write survey questions that uncover what analytics can’t tell you. You might know the average number of logins per user, but you won’t know how often they log into competitor platforms or why they choose one service over another. Focus on probing these unknowns while keeping questions aligned with your research goals.

Refrain from Asking Unnecessary Demographic Questions

Demographic information often adds little value when you’re trying to understand user behavior. While factors like education level or marital status might be useful in some study contexts, they can feel intrusive or sensitive when they don’t directly relate to your research goals.

Before including a demographic question, ask yourself: How will this survey data impact my analysis? If the answer is unclear, it’s best to leave it out. Instead, focus on questions that provide meaningful, actionable insights.

Demographic data are more relevant when used to screen and filter for the best-fitting survey participants. If your study requires insights from a specific user segment (e.g., small business owners, frequent travelers, or first-time buyers), demographics can help ensure you’re gathering feedback from the right audience.

- To learn more, we recommend our article on writing screener surveys.

Surveys Are a Quantitative Method by Nature

Surveys excel at quantitative research, gathering structured data through closed-ended questions that are easy to analyze at scale. While open-ended questions can add valuable context, use them deliberately: having to type out many responses can overwhelm respondents, and free-text answers also make analysis more difficult.

Before including an open-text question, check whether it can be reframed as a multiple-choice question. For example, instead of asking “What are your primary job responsibilities as a UX researcher?” as an open-ended question, provide multiple-choice answer options, including an “Other” field to capture responses you didn’t anticipate. This lets respondents answer quickly and makes the data easier to analyze.

That said, if your research requires deeper insights into the why behind user behavior, a survey might not be the best method. In cases where you need rich qualitative data, consider other research methods, such as interviews, usability testing, card sorting, or tree testing. These methods allow for more in-depth exploration and context beyond what a survey can capture.

Consider Mixed Methods for Small Sample Sizes

Surveys are most effective when they collect hundreds of responses, enough to draw statistically meaningful conclusions. When response counts are low, relying solely on quantitative data may not provide reliable insights.
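To see why a handful of responses is rarely enough, it helps to look at the margin of error for a proportion, such as the share of respondents who pick a given answer. The sketch below uses the standard normal-approximation formula, margin = z · √(p(1 − p) / n), under simplifying assumptions: a simple random sample, 95% confidence (z ≈ 1.96), and the worst case p = 0.5. Real survey samples are rarely truly random, so treat the numbers as a rough guide rather than a power analysis.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion (normal approximation).

    n: number of responses
    p: observed proportion; 0.5 is the worst case and gives the widest interval
    z: z-score for the confidence level; 1.96 corresponds to roughly 95%
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p * (1 - p) / n)


# Compare a small, medium, and larger sample under the same assumptions.
for n in (20, 100, 400):
    print(f"n={n:>3}: ±{margin_of_error(n) * 100:.1f} percentage points")
```

Under these assumptions, 20 responses leave a margin of roughly ±22 percentage points, 100 responses about ±10, and 400 about ±5. Quadrupling the sample only halves the margin, which is why small samples benefit from qualitative support.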

If you expect a small sample size, consider mixing quantitative and qualitative methods by adding a few more open-ended questions alongside structured ones. This mixed-methods approach captures richer data that can help explain the patterns in your soft-quant data.

Drafting Clear Survey Questions

Writing clear survey questions is both an art and a science: it’s not just about what you ask, but how you ask it. Poorly framed questions can lead to confusion, biased responses, or unusable data.

Use the Right Question Types to Gather the Insights You Need

Insightful surveys start with thoughtful question design, because each question format serves a different purpose and yields a different type of data. Choosing the right type of question depends on what you’re trying to learn, who you’re asking, and how you’ll use the data.

- We also recommend our article with a comprehensive list of survey question types.

Since surveys are inherently quantitative, consider using closed-ended formats, which are better suited to structured analysis at scale. Keep in mind that closed-ended questions aim to identify and quantify the "what," not to explain the "why."

Avoid Binary Yes/No Questions

Yes/no questions may seem like a quick and easy way to gather feedback, but they often lack depth and context. Take, for example, a question like: “Have you ever wanted to use this feature?” Even if most participants answer “yes,” that doesn’t validate the feature’s usefulness.

A “yes” doesn’t tell you what they wanted, why they wanted it, or when the need arose. Without follow-up or context, you’re left with a vague data point that’s hard to act on.

While binary questions are simple for participants to answer, they often result in shallow insights. A better approach is to frame questions that explore frequency, intent, or reasoning. For instance:

- “In the past month, how often have you found yourself needing a feature like this?”

- “How important is this feature to your workflow?”

- "Where in your current workflow would this feature fit in?"

Ask About What They Did, Not What They Think They Will Do

A common mistake in surveys is asking participants how likely they are to perform a certain action in the future. For example: “How likely are you to work out using this app?”

While it may seem like a straightforward question, people are notoriously bad at predicting their future behavior. They’re often overly optimistic or unintentionally generous with themselves. In the moment, they may feel motivated and say they’ll work out more often, even if their actual behavior doesn’t match that sentiment, a pattern often described as the intention-behavior gap.

A more accurate way to assess intent is to look at past behavior. Behavioral questions grounded in past actions are more reliable and offer better indicators of future patterns than speculation. For instance, instead of asking about future plans:

- ❌ Don't ask: "How likely are you to work out using this app?"

- ✅ Do ask: “In the past three months, how often have you worked out?”

Similarly, if you're trying to gauge the potential value of a new feature, frame the question around real scenarios. Grounding questions in past experiences leads to more reliable, actionable insights.

- ❌ Don't: “How useful is this feature?”

- ✅ Do: “When was the last time this feature could’ve helped you?”

Common Pitfalls to Avoid

Even small wording choices can significantly affect the quality of your data. Here are a few traps to avoid:

Don’t overload your participants

Asking too many things at once, or overloading the survey with questions, easily leads to survey fatigue and low-quality responses.

- Avoid double-barreled questions: These are questions that ask about two things at once, like:

“How satisfied are you with our website and mobile app?”

The problem is that this asks about satisfaction with two different platforms at once, so a single answer can’t tell you which one it refers to. Split it into two separate questions.

Watch out for leading questions and unintentional bias

Steer clear of leading questions that push respondents toward a certain answer. Even if you don’t mean to lead respondents, a question’s wording can introduce bias: certain words, assumptions, or tone can subtly nudge participants toward a particular response, skewing your data and making it less reliable.

- ❌ Don't: "How much do you love our intuitive new feature?"

The example assumes the feature is intuitive and urges the user to love it.

- ✅ Do: “How would you rate your experience with the new feature?”

This version keeps the question neutral, allowing for a wider range of honest feedback.

Other common signs of biased or leading questions include:

- Assumptive language: “How helpful was our customer support?” assumes the experience was helpful.

- Emotionally charged words: “How amazing did you find our onboarding process?”

To keep your data clean, stick to neutral, objective phrasing that lets users share their honest thoughts without being steered.

Avoid jargon and emotive words

To keep your survey unbiased and easy to understand, avoid technical jargon and strong emotive words. Using plain, neutral language ensures that survey respondents interpret your questions consistently—and aren't subconsciously influenced by the question wording.

Certain keywords or industry-specific terms can unintentionally guide how people respond, or confuse respondents who aren’t familiar with the terminology. As you draft each question, keep the target population in mind.

Before publishing your survey, always do a test run: review each question carefully, on your own or with your teammates. Look for any terms that might sound too promotional, complicated, or emotionally loaded, and replace them with clear, objective alternatives. It’s a small step that can make a big difference in data quality.

Leveraging AI to Design Surveys

With the AI landscape evolving rapidly and survey software increasingly incorporating AI, there’s a growing opportunity to make the survey-building process more efficient. While AI-generated content still requires human oversight, it offers a powerful starting point, helping you draft questions, structure flow, and reduce setup time.

AI won’t replace the entire survey workflow, but it can significantly accelerate and enhance key steps, allowing you to focus more on strategy and insight rather than manual setup. We also recommend our section on Best Practices for Using AI in Research.

On Prompt Engineering: What You Feed is What You Get

Getting good results from generative AI depends on how effectively you communicate with it. This is where prompt engineering becomes essential. The quality of the AI’s output is only as good as the input it receives. Crafting clear, specific, and goal-oriented prompts allows you to guide the AI toward generating relevant, unbiased, and insightful survey content.

Whether you're brainstorming research questions, refining question wording, or exploring different ways to phrase a concept, well-structured prompts help AI understand the context and intent behind your request. In short, prompt engineering bridges the gap between your research expertise and the AI’s capabilities.

Ultimately, prompt engineering is a way of putting guardrails on the AI so that it produces relevant output. The more detail you provide about your study context, background, learning objectives, participant criteria, and so on, the more relevant the output will be.

Because AI is changing every day, integrate it into your research workflow thoughtfully. With that in mind, here are a few key areas where AI can be used effectively in survey development.

Brainstorming and Drafting a Study Plan with AI

An effective survey starts with deliberate planning. Before drafting the actual study, clearly define its context, identify knowledge gaps, and outline your learning objectives. Think about what you need to uncover, which participants can best inform your research questions, and how you’ll structure the survey to collect data that leads to meaningful, actionable insights.

This is where AI can be a valuable thinking partner. In the early, exploratory phase of planning, AI is especially useful for brainstorming and idea generation. Whether you're mapping out potential themes, identifying assumptions to test, or exploring different angles of a research question, AI can help you diverge and expand your perspective. Once you've gathered initial ideas, you can use AI to help refine your study plan, suggest relevant question types, or even flag potential blind spots.

While AI won’t replace the human expertise required for sound research strategy, it can help you move faster and think broader—making the early stages of survey design more dynamic and collaborative.

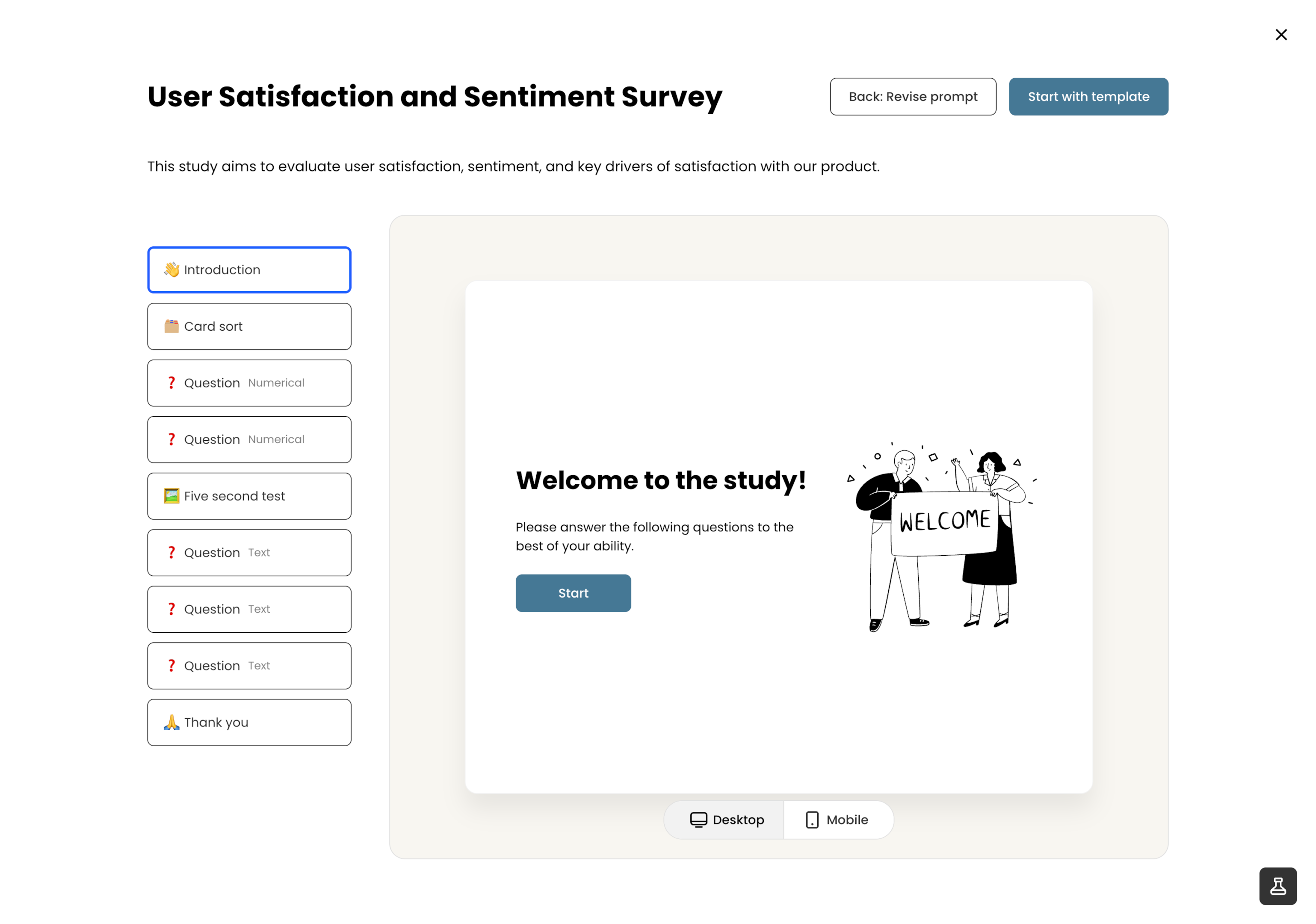

Generating a Study with AI

Once you’ve clearly defined and communicated your survey goals along with any context, target audience, and research objectives, you can start using AI to generate a relevant survey template with a flow and structure designed to yield more reliable data. The clearer your input, the more closely the AI’s output will match your intent.

To further improve the quality of AI-generated survey questions, you can include additional instructions. For example, specify what to avoid (e.g., leading language, jargon, or double-barreled questions), or describe the tone or style you want, whether professional, conversational, or neutral. You can also guide the AI on how to frame certain types of questions (e.g., focusing on past behavior rather than future intent). Below is a snippet of the prompt we use for our AI-assisted study generation:

# Specify role

You are an experienced UX researcher assistant creating questions for a survey. Based on user inputs, devise an effective series of questions.

# Design of the study

The format of each question is either open-ended text response, single-select, multi-select, or numerical scale questions.

# Notes and guidelines for creating the output

- Avoid asking yes/no binary questions. Perhaps asking questions beginning with “what” or “how” is helpful.

- Avoid leading questions and strong emotive words in the questions.
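The prompt snippet above holds fixed instructions, while the study-specific details this article recommends supplying (background, learning objectives, participant criteria) change with every study. As a minimal sketch, assuming you assemble prompts in Python before pasting or sending them to whatever AI tool you use, the hypothetical `StudyContext` class and `build_survey_prompt` function below combine the two. They are illustrative only, not Hubble’s implementation, and the code builds a text prompt without calling any AI model or service.

```python
from dataclasses import dataclass, field


@dataclass
class StudyContext:
    """Hypothetical container for the study details an AI assistant should see."""
    background: str
    objectives: list[str]
    participant_criteria: str
    tone: str = "neutral"
    # Pitfalls to steer the AI away from, drawn from the guidelines in this article.
    avoid: list[str] = field(default_factory=lambda: [
        "yes/no binary questions",
        "leading questions and strong emotive words",
        "double-barreled questions",
        "technical jargon",
    ])


def build_survey_prompt(ctx: StudyContext) -> str:
    """Combine fixed instructions with study-specific context into one prompt string."""
    lines = [
        "# Specify role",
        "You are an experienced UX researcher assistant creating questions for a survey. "
        "Based on user inputs, devise an effective series of questions.",
        "",
        "# Design of the study",
        "Each question is an open-ended text response, single-select, "
        "multi-select, or numerical scale question.",
        "",
        "# Study context",
        f"Background: {ctx.background}",
        "Learning objectives:",
        *[f"- {goal}" for goal in ctx.objectives],
        f"Target participants: {ctx.participant_criteria}",
        "",
        "# Notes and guidelines for creating the output",
        *[f"- Avoid {item}." for item in ctx.avoid],
        "- Ask about past behavior rather than future intent.",
        f"- Use a {ctx.tone} tone.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical example study, for illustration only.
    example = StudyContext(
        background="A fitness app is exploring a workout-planning feature.",
        objectives=[
            "Understand how often users currently plan their workouts",
            "Identify the tools they use for planning today",
        ],
        participant_criteria="Adults who used a fitness app in the past three months",
    )
    print(build_survey_prompt(example))
```

Keeping the fixed guidelines separate from the per-study fields makes it easy to reuse the same guardrails across studies while still giving the AI the context it needs to produce relevant questions.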

Rephrasing Questions with AI

Once your initial survey draft is complete, you can also use AI to rephrase individual questions. While AI can support overall survey creation, working through each question individually allows for more precise editing and quality control that might be missed at the study level. For example, AI can help you:

- Rewrite confusing or biased questions, ensuring they are clear, neutral, and easy to understand.

- Detect and flag leading questions—common pitfalls that can skew survey results or confuse respondents.

- Adapt the tone of each question to match your audience, whether you're aiming for a professional, casual, or neutral voice.

Streamlining Surveys with Hubble AI

Hubble seamlessly integrates AI across multiple touchpoints to help you streamline your research process and shorten research turnaround time.

With Hubble’s AI-powered study generation, you can create highly contextual and relevant surveys from just a few inputs. The system is trained on real-world research questions and best practices, blending the power of AI with the expertise of seasoned researchers to deliver survey templates that are practical, focused, and research-ready.

The Rephrase with AI feature allows for a detailed inspection of each survey question. To improve both trust and transparency, Hubble not only suggests edits—it also explains why the change was made and how it improves the question. This way, you're not just getting faster output, but you’re also getting higher-quality, more credible surveys, backed by both machine intelligence and research intent.